Imagine an AI that doesn't just talk, but also sees and reasons about what it is shown. That's the pitch behind Chat.Z.AI, an open-source platform powered by GLM (General Language Model) technology. Developed by Zhipu AI, it brings text, images, and video together in a single conversational interface.

What Is Chat.Z.AI?

Chat.Z.AI is more than a chatbot—it’s a versatile AI ecosystem built on the GLM family of models. Its flagship, GLM-4.5V, takes AI beyond text to master multimodal tasks (handling images, videos, and text simultaneously). Unlike closed models (e.g., GPT-4V), it’s 100% open-source—meaning anyone can use, modify, or study it for free.

The Genius Behind GLM-4.5V: How It Works

Three design choices explain what GLM-4.5V can do:

- Foundation: Built on the lightweight GLM-4.5-Air model, it inherits proven techniques from earlier vision-focused models (like GLM-4.1V-Thinking).

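- Scalable Architecture: Uses a 106B-parameter Mixture of Experts (MoE) design. Instead of running one giant network for every input, it contains multiple "sub-networks" (experts), and a router activates only a few of them at a time. Only a fraction of the parameters does work on any given step, which keeps the model fast and efficient despite its size.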

- Ongoing Refinement: Its knowledge is fixed at April 2023, so it does not learn from new events on its own, but Zhipu AI continues to refine it to stay ahead of emerging challenges.
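The expert-routing idea from the list above can be illustrated with a toy sketch. This is not GLM-4.5V's actual implementation: the number of experts, the `top_k` value, the gate weights, and the helper names (`make_expert`, `moe_forward`) are all assumptions chosen for illustration, since the article does not describe the real router.

```python
import math

def softmax(scores):
    """Turn raw gate scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def make_expert(scale):
    """Hypothetical expert: a tiny 'network' that just scales its input."""
    return lambda x: [scale * xi for xi in x]

def moe_forward(x, gate_weights, experts, top_k=2):
    """Score every expert, run only the top_k, and mix their outputs."""
    # One linear gate score per expert (dot product of gate row and input).
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    probs = softmax(scores)
    # Keep the indices of the top_k highest-probability experts.
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    output = [0.0] * len(x)
    for i in chosen:
        weight = probs[i] / norm      # renormalize over the chosen experts
        expert_out = experts[i](x)    # unchosen experts are never executed
        output = [o + weight * y for o, y in zip(output, expert_out)]
    return output, chosen

# Four toy experts, but only two run for this input.
experts = [make_expert(s) for s in (1.0, 2.0, 3.0, 4.0)]
gate_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
x = [2.0, 1.0]
output, chosen = moe_forward(x, gate_weights, experts, top_k=2)
print("experts used:", chosen)
print("mixed output:", output)
```

The point of the design is visible in the loop: the cost of a forward pass depends on `top_k`, not on the total number of experts.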

Why GLM-4.5V Dominates: The Benchmark Proof

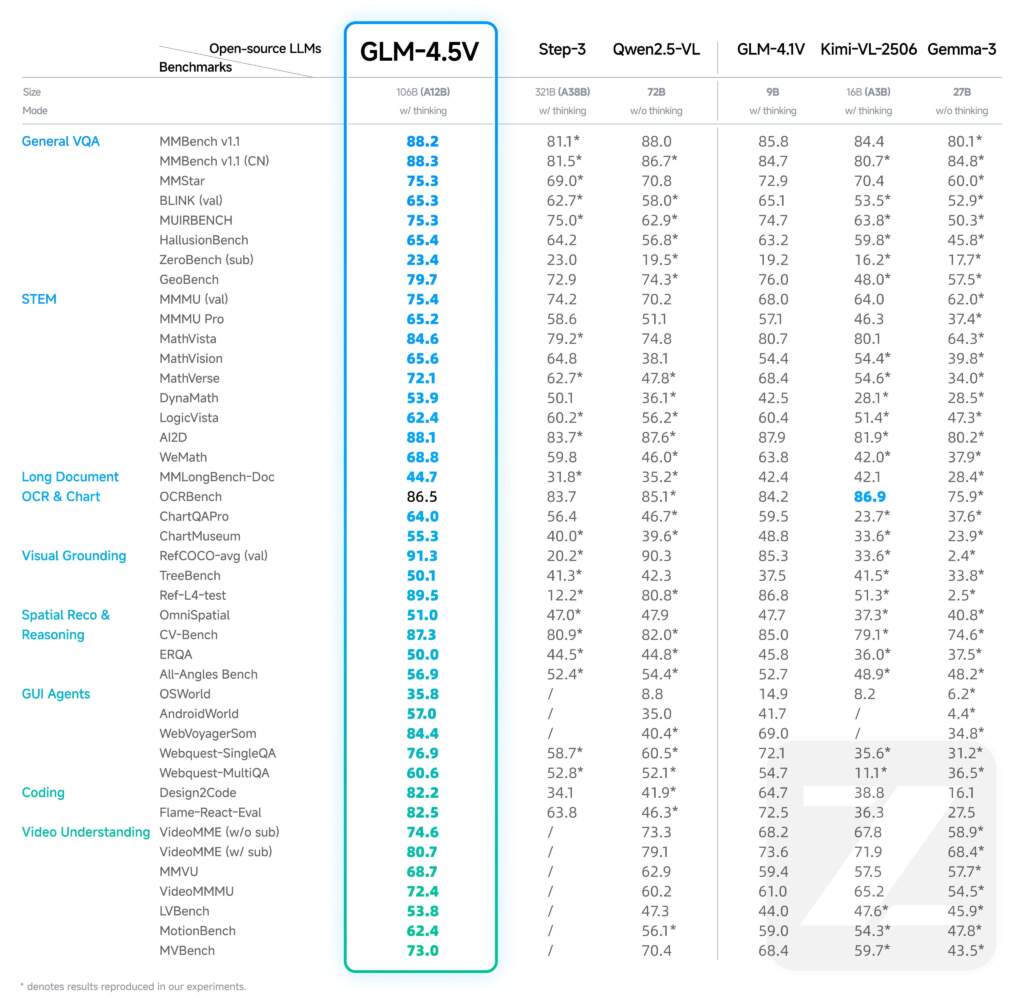

The table below summarizes results from Zhipu AI's own tests, which position GLM-4.5V as the leading open-source model for visual reasoning. Here's what it shows:

| Task Category | What It Measures | GLM-4.5V Score | Rival Scores |
|---|---|---|---|
| General VQA | Answering image questions (e.g., "What's in this photo?") | 88.2–88.3 | 80.1–86.7 |
| STEM | Solving math/geometry from diagrams | 65.2–84.6 | 37.4–80.7 |
| Video Understanding | Analyzing actions in clips | 74.6–80.7 | 58.9–73.6 |
| Visual Grounding | Locating objects in images | 91.3 (RefCOCO) | 20.2–90.3 |

Key Takeaway: By Zhipu AI's reported results, GLM-4.5V beats competitors in 41 out of 41 benchmarks, which supports its claim to be the most capable open-source multimodal AI available today.
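To put the score ranges into perspective, the short sketch below computes the gap between the top of GLM-4.5V's range and the top of the rivals' range in each category. This is a rough reading of the table only: the two ends of a range may come from different benchmarks, so the gaps are indicative rather than a like-for-like comparison. The variable and function names are made up for this example.

```python
# Upper end of each score range from the table: (GLM-4.5V, best rival).
top_scores = {
    "General VQA": (88.3, 86.7),
    "STEM": (84.6, 80.7),
    "Video Understanding": (80.7, 73.6),
    "Visual Grounding": (91.3, 90.3),
}

def margins(scores):
    """Return the GLM-4.5V lead per category, rounded to one decimal place."""
    return {cat: round(glm - rival, 1) for cat, (glm, rival) in scores.items()}

lead = margins(top_scores)
for category, gap in lead.items():
    print(f"{category}: +{gap} points")
```

Read this way, the lead is widest in video understanding and narrowest in visual grounding.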

Chat.Z.AI’s Superpowers: What Sets It Apart

Users love Chat.Z.AI for three reasons:

A. Multimodal Mastery

It doesn’t just “read” text—it interprets visuals:

- Ask it to "Explain this chart" or "Generate a story from this image" and it can handle both.

- Useful for professionals (e.g., doctors analyzing X-rays, designers converting sketches to code).

B. Human-Like Adaptability

It tailors responses to you:

- If you’re stressed, it offers calming strategies alongside solutions.

- If you ask a technical question, it uses precise jargon; if you share a meme, it cracks jokes.

C. Privacy & Accessibility

- Zero Data Retention: Chat.Z.AI never stores your conversations or personal info.

- Free & Open: Unlike paid models (e.g., GPT-4), it’s available to everyone—students, startups, and hobbyists alike.

The Future of Chat.Z.AI: What’s Next?

Zhipu AI is already pushing boundaries:

- Real-Time Video Analysis: Imagine Chat.Z.AI describing live events (e.g., sports games) as they happen.

- 3D Object Recognition: Soon, it could identify and manipulate 3D models for gaming or engineering.

- Domain Specialization: Future versions may focus on fields like healthcare (analyzing scans) or law (reviewing documents).

Why Choose Chat.Z.AI?

In a world of closed, expensive AI, Chat.Z.AI is a breath of fresh air:

- For Developers: Build innovative apps without licensing fees.

- For Learners: Get personalized tutoring in any subject—complete with visual aids.

- For Everyone: Get accurate answers to questions like, “What’s wrong with this X-ray?” or “Summarize this video lecture.”

Democratized Access to Cutting-Edge AI

Chat.Z.AI is 100% open-source and free—unlike closed models (e.g., GPT-4V) that charge hefty fees. This means:

- No paywalls: Students, startups, and hobbyists can use enterprise-grade AI without budget constraints.

- Full customization: Developers can tweak the model to fit niche needs (e.g., building a local healthcare app or educational tool).

Seamless Multimodal Intelligence

Unlike text-only chatbots, Chat.Z.AI “sees” and reasons about images, videos, and text simultaneously. For example:

- Upload a photo of a broken appliance → Get step-by-step repair instructions.

- Share a video of a lab experiment → Receive a detailed analysis of results.

- Paste a complex chart → Get a simplified summary + key takeaways.

This eliminates the hassle of switching between tools and unlocks new possibilities (e.g., visual storytelling, data visualization).
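For developers, an image-plus-question request like the first example above might be assembled as follows. This is a sketch under stated assumptions: it uses the widely adopted OpenAI-style chat message layout (a `content` list mixing `image_url` and `text` parts), and the model identifier `glm-4.5v` and the helper name `build_image_question` are hypothetical. The article does not document the actual API, so check the official documentation before relying on any of these field names. The code only builds the request body and sends nothing over the network.

```python
import base64
import json

def build_image_question(question, image_bytes, mime_type="image/png", model="glm-4.5v"):
    """Build a chat request body that pairs one image with a text question."""
    # Embed the image as a base64 data URL so the body is self-contained.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,  # assumed model identifier, not confirmed by the article
        "messages": [
            {
                "role": "user",
                "content": [
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
                    },
                    {"type": "text", "text": question},
                ],
            }
        ],
    }

# Placeholder bytes stand in for a real photo read from disk.
payload = build_image_question(
    "What is broken in this appliance, and how do I fix it?",
    b"placeholder image bytes",
)
print(json.dumps(payload, indent=2)[:300])
```

Keeping the image and the question in the same message is what lets the model reason about both together instead of treating them as separate requests.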

Trustworthy, Human-Centric Design

Chat.Z.AI prioritizes privacy and empathy:

- Zero data retention: Your conversations aren’t stored or sold—ideal for sensitive topics (health, finances).

- Adaptive responses: It adjusts tone and complexity to match your needs (e.g., simplifying jargon for beginners or diving deep for experts).

- Ethical guardrails: Built to avoid bias, misinformation, and harmful outputs—ensuring safe, responsible use.

Why This Matters

Chat.Z.AI isn’t just a tool—it’s a bridge between human curiosity and machine intelligence. Whether you’re a student solving homework, a professional analyzing data, or a creator brainstorming ideas, it empowers you to achieve more with less friction.

In short, Chat.Z.AI makes advanced AI accessible, intuitive, and trustworthy for everyone.

Conclusion

Chat.Z.AI isn’t just an AI tool—it’s a democratizer of intelligence. By making advanced multimodal AI open-source, it empowers everyone to innovate, learn, and create. Whether you’re a tech geek or a curious beginner, Chat.Z.AI is here to make the future of AI accessible to all.

Frequently Asked Questions

What is Chat.Z.AI?

Chat.Z.AI is an open-source AI platform that can understand and process text, images, and videos. Built by Zhipu AI using the GLM (General Language Model) family, its flagship model GLM-4.5V is free to use, modify, and integrate into your own projects.

How does GLM-4.5V work?

GLM-4.5V uses a 106B-parameter Mixture of Experts (MoE) architecture. A router activates only a few "expert" networks for each input, which keeps it fast and efficient relative to its size. It can process text, images, and video simultaneously.

How is Chat.Z.AI different from GPT-4V?

- Open-source (GPT-4V is closed-source)

- Free to use (GPT-4V is paid)

- Fully customizable (GPT-4V cannot be modified)

- Privacy-friendly: zero data retention policy

Can Chat.Z.AI handle more than just text?

Yes. Chat.Z.AI is multimodal, meaning it can work with text, images, and videos. You can ask it to explain a chart, give repair instructions from a photo, or summarize a video with key takeaways.

Who is Chat.Z.AI best suited for?

- Students: Homework help, concept explanations, visual learning

- Developers: Building AI apps, creating custom solutions

- Professionals: Data analysis, X-ray interpretation, diagram explanations

- Creators: Storytelling, brainstorming, design-to-code conversion

What is Chat.Z.AI’s privacy policy?

Chat.Z.AI follows a zero data retention policy — no conversations or personal data are stored.

Can I run Chat.Z.AI offline or on my own server?

Yes. Since it’s open-source, you can install it locally and run it on your own infrastructure.
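Before self-hosting, it helps to estimate how much memory the model weights alone would need. The sketch below is simple arithmetic on the 106B parameter count quoted in this article. The precision options are common choices, not formats the article confirms are available for GLM-4.5V, and the estimate ignores activations, caches, and runtime overhead. Note that MoE routing reduces the compute per step, but all expert weights typically still have to be loaded.

```python
PARAMS = 106e9  # parameter count stated in the article

# Bytes needed to store one parameter at common precisions (assumed options).
BYTES_PER_PARAM = {"fp32": 4, "bf16/fp16": 2, "int8": 1, "int4": 0.5}

def weight_memory_gb(params, bytes_per_param):
    """Memory for the weights only, in gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9

estimates = {name: weight_memory_gb(PARAMS, size) for name, size in BYTES_PER_PARAM.items()}
for name, gb in estimates.items():
    print(f"{name:>9}: about {gb:.0f} GB for weights")
```

Even at 16-bit precision the weights come to roughly 212 GB, so running the full model locally generally means multi-GPU server hardware rather than a laptop.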

How accurate is GLM-4.5V?

According to Zhipu AI’s benchmarks, GLM-4.5V outperformed competitors in 41 out of 41 tests:

- General VQA: 88.3 (rivals 80.1–86.7)

- STEM reasoning: 84.6 (rivals 37.4–80.7)

- Visual grounding: 91.3 (rivals 20.2–90.3)

Can Chat.Z.AI do live video analysis?

Not yet, but Zhipu AI is working on future features like real-time video analysis and 3D object recognition.

How do I get started with Chat.Z.AI?

- Download it from the official GitHub repository

- Follow the documentation to install it

- Integrate it into your own projects or use the chat interface directly

Is Chat.Z.AI really free?

Yes. It’s 100% free and open-source, even for commercial projects — no license fees required.

Do I need coding skills to use Chat.Z.AI?

No. You can simply ask questions in natural language, upload images for explanations, and get simplified answers to complex topics — no programming knowledge is required.
